I have worked with several different Sitecore implementations, and each one is unique in its own way. Teams are different, responsibilities are different, and people are definitely different 😉 Managed Cloud is also subtly different when it comes to a few mundane chores that you may have done differently in the past. With Managed Cloud, it is a partnership between clients, implementation partners and, yes, the Sitecore Managed Cloud team. So we have to keep each other posted. That is definitely challenging and needs to be well planned, especially on tight timelines like ours.

I must have logged around 50 tickets at my last count, combining traditional Sitecore tickets and Managed Cloud tickets. In retrospect, though it feels like I spammed Sitecore, some of the questions were purely about understanding responsibilities and where to draw the lines between all the teams involved. Documentation does not call this out, unfortunately! Also, the typical turnaround time on these types of support tickets is 3 days, which is a big chunk of the schedule for a one-sprint go-live project like ours, lol. So my approach was to log the ticket and keep working on the problem on the side while waiting for the Sitecore team to respond.

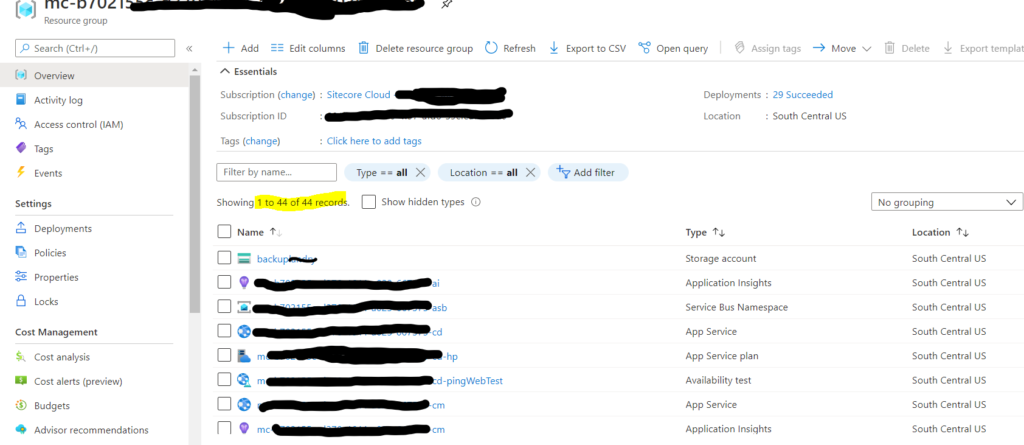

I will start with the easy ones. As we built more confidence in our code and content, especially when we were ready to hand off our features for content load, it was super important to ensure that backup and restore was turned on and matched our requirements for backup frequency and retention on each environment. These are handled by the Sitecore Managed Cloud team via a service cloud request.

Backup Schedule for Azure App Services

Use this request to back up your app services: basically code, configs and pretty much the whole file system. For example, on production I requested a daily backup frequency with one week of retention. You can relax the requirement if it's an environment that is less of a concern.

Backup Schedule for Azure SQL databases

Use this request to back up your databases: your content and basically anything stored in Sitecore. For our production instance, I requested backups for core, master and web with a daily frequency and 4 weeks of retention. This level of retention should usually be enough for most solutions.

Quick Tip: As a perfectionist (at least I would like to call myself that, lol), I wanted to ensure all the documented health checks pass on all my CM and CD (web role) instances. It turns out that it is okay for /healthz/ready to fail there, and the failure does not mean anything. So if you see your instance reporting an "Unhealthy" status on that URL, do not fret. You did nothing wrong as long as /healthz/live is good on CM and CD. It turns out the /healthz/ready check is meant more for other roles, such as processing or reporting. The documentation could be out of date, but I hope this saves you some time.
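If you want to script this check instead of opening the URLs by hand, here is a minimal Python sketch of the rule above. It only encodes what I described: for CM and CD, /healthz/live decides whether the instance is fine, and /healthz/ready is recorded but ignored. The function names and the example host name are made up for illustration, so treat this as a starting point rather than an official check.

```python
from urllib.error import HTTPError, URLError
from urllib.request import urlopen


def probe(base_url, path, timeout=10):
    """Return the HTTP status code for base_url + path, or None if unreachable."""
    try:
        with urlopen(base_url.rstrip("/") + path, timeout=timeout) as resp:
            return resp.status
    except HTTPError as err:
        # Non-2xx responses still carry a status code we want to report.
        return err.code
    except (URLError, OSError):
        return None


def instance_ok(live_status, ready_status):
    """On CM/CD only /healthz/live matters; ready_status is informational."""
    return live_status == 200


def check_instance(base_url):
    """Probe both endpoints and summarize the result for one CM or CD instance."""
    live = probe(base_url, "/healthz/live")
    ready = probe(base_url, "/healthz/ready")
    return {"live": live, "ready": ready, "ok": instance_ok(live, ready)}
```

Usage would look like `check_instance("https://my-cm.example.com")`, where the host name is a placeholder for your own CM or CD URL.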

Need a custom domain so that your URLs on lower environments and production are end-user friendly, and not the hash-looking ones that come with the initial setup? The Sitecore Managed Cloud team does not own this. It's you, and probably the client team, depending on the specific scenario. The links below might help:

https://docs.microsoft.com/en-us/azure/static-web-apps/custom-domain

https://docs.microsoft.com/en-us/azure/app-service/configure-ssl-bindings

SOLR

I cannot answer this one with confidence, because the Sitecore team said different things about it on different tickets. One thing I clearly remember: when I was debugging an issue related to indexes, I was very tempted to kick SOLR and restart it. Typically, if your team owns the infrastructure, you can log in with PuTTY or PowerShell and run some quick commands. Luckily, the issue got resolved and I did not need to perform this step, but the Sitecore team did mention that I would have to create a ticket if I needed to do this. Also, the Sitecore team sets SOLR up when they set up a brand new resource group per contract, so I guess it's mostly Sitecore that owns SOLR. The only access we had was the SOLR admin console, and to be honest, there are many things you can't do from the console. You need more command power when it comes to SOLR.

Security Hardening, Session Set up and Tuning

Wondering who does the whole nine yards: performance tuning, hardening, session state, and so on? I did too. When I reached out to Sitecore, they said that was going to be me. I was bummed. My first thought was, why doesn't this come already set up?

But since I had the confirmation, I started at full speed, only to find out the hard way that session setup had already been done by the Sitecore team, lol. I am sharing this so you do not waste your time working out the correct decisions for out-of-proc session state and so on. By default, CD roles are set to use out-of-proc session state with Redis as the provider. So no action was needed from me to set up anything related to session. Crossed that off my list.

Now, coming to security hardening, I noted that some steps were already in place and done by Managed Cloud. However, most were not, so I encourage the regular drill of going over the usual security hardening chores for the Experience Platform. I have some detailed notes with me, so if anyone is curious, reach out!

Almost forgot: for tuning, none of the old rules apply to Azure SQL. So it is important to give the links below a read and do what you can:

https://doc.sitecore.com/developers/100/sitecore-experience-manager/en/monitoring-and-maintaining-azure-sql.html

https://kb.sitecore.net/articles/909298

I was able to do a good number of the things mentioned in the links above, and some were, again, already done. 🙂

I also remember having to apply this one, https://kb.sitecore.net/articles/858026, to get rid of a pesky error like the one below that kept creeping into my logs. After I changed the value to the suggested one, the error went away.

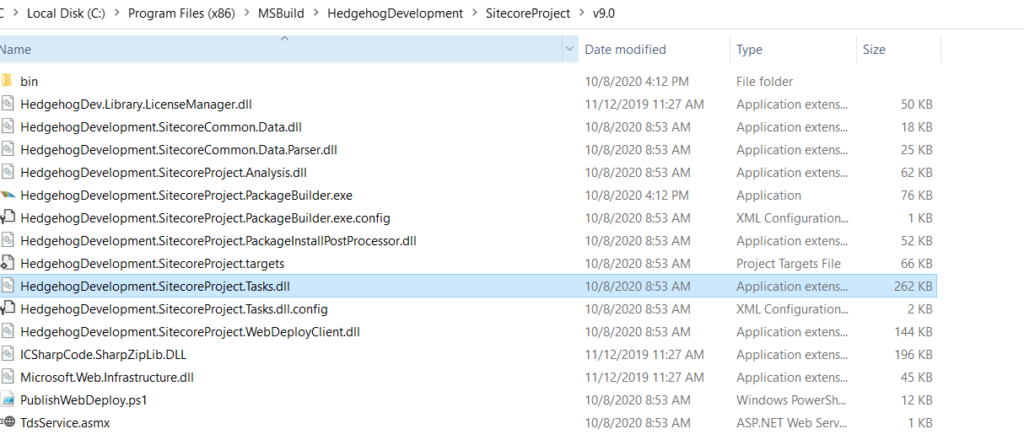

Whitelist Authoring

This is related to some of the security hardening steps we need to do when authoring is exposed publicly. If authoring is not exposed, you could relax some of those requirements, but Sitecore recommends doing those steps regardless, so I do not want to give an incorrect recommendation. Either way, letting only a finite set of people reach authoring is much more secure. To do this, I read the documentation out there but was mostly confused, and the Sitecore Cloud team was sweet enough to do this chore for us. Once they did it, I figured it was not as complicated as it sounded in the documentation. Basically, you run a PowerShell script (it is in the documentation) to fetch what authoring needs to talk to, and whitelist all of those as outbound addresses on the CM app service. Plus, they added the outbound IP addresses of reporting to the SQL server firewall. I still think it's smart to ask the Sitecore team to do this. You are good with this setup as long as your app service is not scaled up or down and its pricing tier is not changed, in which case the outbound IP addresses can change and the list needs to be revisited. So far it's been a month and we have seen no issues with the initial setup.

Another important thing to note: we also have to add the authoring IPs to the outbound addresses on the CM app service. We noticed that a request from the authoring server back to authoring was not being processed and was blocked with a forbidden message, lol. To avoid this, I had to add a couple more outbound IP addresses to the existing list. It was not fun, because I had to add a bunch of them manually.
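Since adding those addresses by hand was the painful part, here is a small Python sketch of how the list could be assembled before you enter it. It assumes you have copied the comma-separated outbound IP strings that Azure shows for each app service (the `outboundIpAddresses` and `possibleOutboundIpAddresses` properties) and simply merges, validates and de-duplicates them into single-address CIDR entries. The function name and the sample addresses are made up for illustration, and this is not the PowerShell script from the Sitecore documentation.

```python
import ipaddress


def build_allow_list(*csv_values):
    """Merge comma-separated IP strings into a de-duplicated list of CIDR entries.

    Each address becomes a single-host rule (/32 for IPv4, /128 for IPv6).
    Order of first appearance is kept so the result is easy to compare by eye.
    """
    seen = set()
    allow_list = []
    for value in csv_values:
        for part in value.split(","):
            part = part.strip()
            if not part:
                continue
            # Raises ValueError on a malformed address, so typos surface early.
            ip = ipaddress.ip_address(part)
            cidr = f"{ip}/{ip.max_prefixlen}"
            if cidr not in seen:
                seen.add(cidr)
                allow_list.append(cidr)
    return allow_list


# Placeholder values from the documentation-only 203.0.113.0/24 range.
cm_outbound = "203.0.113.10, 203.0.113.11"
reporting_outbound = "203.0.113.11,203.0.113.20"
rules = build_allow_list(cm_outbound, reporting_outbound)
```

Re-running something like this after a scale or pricing tier change makes it easier to spot which addresses are new compared to what is already whitelisted.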

Debugging Tricks

This is the last section of this blog, but definitely the most important one. Without this knowledge, I felt crippled. It was super hard to keep track of logs using Kudu: the timestamps are not right and you never know if you are looking at the correct log file. So, to review the latest logs at all times, I recommend you follow the steps here, even if it takes a couple of extra minutes.

I was so much more confident debugging issues once I had a handle on the latest logs. Also, make sure your app settings are correct so that Application Insights always receives the logs properly.
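If you do end up with a folder listing of log files and are unsure which one is current, one option is to trust the timestamp in the file name rather than the modified time shown by the file browser. The Python sketch below assumes the usual Sitecore naming of `log.yyyyMMdd.txt` and `log.yyyyMMdd.HHmmss.txt`. That pattern and the function names are my assumptions for illustration, so adjust the regular expression if your files are named differently.

```python
import re
from datetime import datetime

# Assumed file name pattern: log.20210315.txt or log.20210315.101530.txt
LOG_NAME = re.compile(r"^log\.(\d{8})(?:\.(\d{6}))?\.txt$")


def log_timestamp(name):
    """Return the datetime encoded in a log file name, or None if it does not match."""
    match = LOG_NAME.match(name)
    if not match:
        return None
    date_part = match.group(1)
    time_part = match.group(2) or "000000"  # no time suffix means start of day
    return datetime.strptime(date_part + time_part, "%Y%m%d%H%M%S")


def latest_log(names):
    """Pick the file whose name carries the most recent timestamp."""
    stamped = [(log_timestamp(n), n) for n in names]
    stamped = [(ts, n) for ts, n in stamped if ts is not None]
    return max(stamped)[1] if stamped else None
```

For example, given `log.20210314.txt`, `log.20210315.txt` and `log.20210315.101530.txt`, the last one is selected, and files that do not follow the pattern are ignored.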

Alright, this is it for now! Next up, I will sum up how Sitecore helped me solve an issue with the index worker. It took us a good month or so, but hey, it is now fixed. It is also very much related to Managed Cloud, but deserves its own blog entry. 🙂